Defending Against Backdoor Attacks through Causality-Augmented Diffusion Models for Dataset Purification

Image credit: Unsplash

Abstract

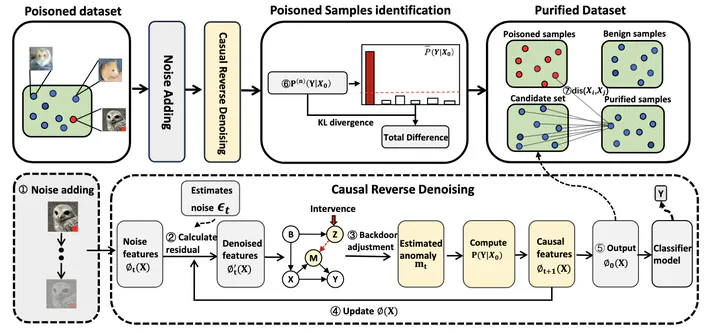

Dataset-based defense is a common proactive defense against backdoor attacks: it filters and removes poisoned samples from the training dataset. Recent research has used diffusion models to purify contaminated samples. However, during both the forward noising and backward denoising processes, the inherent noise and the distribution characteristics of the data can also affect how poisoned samples are purified, making the process unreliable. To address this issue, this paper re-examines the purification process from the perspective of causal graphs, revealing how backdoor triggers and noise act as confounding factors that create spurious associations between contaminated images and labels. To eliminate these spurious associations, we propose a Causality-Augmented Diffusion Model-Based Defense (CADMBD). CADMBD applies multiple iterations of forward noise addition and backward denoising to the input data in the diffusion model. During backward denoising, residual calculation and causal layer adjustment are used to eliminate backdoor pathways, thereby establishing a true causal relationship between benign features and labels. Finally, the most suitable generated image is selected as the final purified image based on the pixel and feature distances from each iteration. Experimental results show that CADMBD handles various complex attacks effectively, significantly improving purification while minimizing the impact on benign-sample accuracy, and outperforms baseline defense methods.
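The iterate-then-select loop described in the abstract can be illustrated with a rough sketch. This is not the authors' implementation: the abstract does not give the noise schedule, the denoiser, the causal layer, or the exact selection criterion. The sketch assumes a standard DDPM-style forward step, takes the denoiser and feature extractor as caller-supplied placeholders (`denoise_fn`, `feature_fn`), and uses a weighted sum of pixel and feature distances with the smallest score winning. All function names, parameters, and the selection rule are hypothetical.

```python
import numpy as np

def forward_noise(x0, alpha_bar, rng):
    """DDPM-style forward step (assumed): x_t = sqrt(a) * x0 + sqrt(1 - a) * eps."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * eps

def select_candidate(original, candidates, feature_fn, weight=0.5):
    """Pick the candidate with the smallest combined distance.

    The weighted-sum rule is an assumption; the abstract only says that
    pixel and feature distances from each iteration are used.
    """
    scores = []
    for cand in candidates:
        pixel_d = np.linalg.norm(cand - original)
        feat_d = np.linalg.norm(feature_fn(cand) - feature_fn(original))
        scores.append(weight * pixel_d + (1.0 - weight) * feat_d)
    return int(np.argmin(scores))

def purify(x, denoise_fn, feature_fn, n_iters=3, alpha_bar=0.9, seed=0):
    """Run several noise/denoise rounds and return (best_image, best_index).

    denoise_fn stands in for the backward process, including whatever
    residual calculation and causal-layer adjustment the method applies.
    """
    rng = np.random.default_rng(seed)
    candidates = []
    current = x
    for _ in range(n_iters):
        noisy = forward_noise(current, alpha_bar, rng)
        current = denoise_fn(noisy)
        candidates.append(current)
    best = select_candidate(x, candidates, feature_fn)
    return candidates[best], best
```

In practice `denoise_fn` would wrap a trained diffusion model and `feature_fn` a pretrained feature extractor; simple stand-ins such as clipping to `[0, 1]` and a per-channel mean are enough to exercise the control flow.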

Publication

2024 IEEE 23rd International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom)